#Awstats statistics of generator

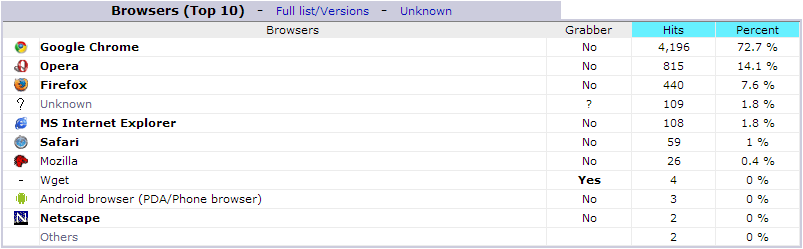

The following clauses show a selection of AWStats records for 2013. Each clause has a short commentary and the screen capture from the AWStats report.

For the selected period, the year 2013, the site had over 12,000 unique visitors making an average of 1.8 visits each. Some 220,000 pages were viewed and about 19 GB of data was downloaded. All the monthly numbers rise through the year, except that the February viewed-page count is down. About 1 MB of data was downloaded in each visit. In October, just prior to the SPDC meeting, the bandwidth peaks with the transfer of data and functionality from the closing of the IEEE On-line SPD Forum.

This record shows how truly international the site is. Unsurprisingly the US is top, Canada shows little interest, and China is #3 behind the UK.

Robots leave identification traces behind in the site access logs. Visits and hits from robots and spiders are comparable with our actual site visitor numbers. Whatever gets posted in the public areas is crawled over and referenced for web searching. As robots and spiders don't have access, none of the private-area materials get reported on.

How long do we capture the attention of a visitor for? The answer is mostly less than 30 s, but 245 s on average. This is why the material that the visitor sees must be relevant to their search to keep them viewing.

Due to the high number of robot and spider visits, robots.txt is the most downloaded file. Robots.txt gives instructions on what to visit and what not to visit on a site. This file can be used for commercial advantage and so, although noted, many robots ignore the file instructions. After that, the highest download is the surge generator tutorial, followed by a strong showing of the SPDC luncheon presentations.
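To make the robots.txt behaviour described here concrete, the sketch below shows how a well-behaved robot would read such a file using Python's standard `urllib.robotparser` module. The file content, the `ExampleBot` name and both paths are hypothetical; the site's real robots.txt is not reproduced in this report.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for illustration only).
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set a robot can query."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(EXAMPLE_ROBOTS_TXT)

# A compliant robot asks before fetching; a robot that ignores the file
# simply skips this check, which is why the rules are only advisory.
public_ok = parser.can_fetch("ExampleBot", "/tutorials/example.pdf")
private_ok = parser.can_fetch("ExampleBot", "/private/minutes.pdf")
print("public allowed:", public_ok)
print("private allowed:", private_ok)
```

Nothing in the file enforces the rules, so access to private areas still has to be restricted by the server itself.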

#Awstats statistics of pdf

#Awstats statistics of update

The server host automatically monitors our site traffic using a piece of software called AWStats.

    Running '"/usr/share/awstats/-configdir="/etc/awstats"' to update config
    Create/Update database for config "/etc/awstats/.conf" by AWStats version 6.95 (build 1.943)
    Phase 2 : Now process new records (Flush history on disk after 20000 hosts).
    Searching new records from beginning of log file.
    Running '"/usr/share/awstats/by AWStats version 6.95 (build 1.943)
    From data in log file "perl /usr/bin/ standard < /var/log/maillog |".
    Running '"/usr/share/awstats/-configdir="/etc/awstats"' to update config localhost.localdomain
    Create/Update database for config "/etc/awstats/ nf" by AWStats version 6.95 (build 1.943)
    Phase 1 : First bypass old records, searching new record.
    Direct access after last parsed record (after line 16838)
    Running '"/usr/share/awstats/by AWStats version 6.95 (build 1.943)
    From data in log file "/var/log/httpd/access_log".
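The update run above reads records from the web server's access log. As a rough illustration of how robot hits can be told apart from visitor hits in such a log, here is a minimal sketch. It assumes the Apache "combined" log format and a simple keyword test on the user-agent string; AWStats itself relies on its own robot database, so this is not its actual method. The sample lines and the `ROBOT_HINTS` list are invented for the example.

```python
import re
from collections import Counter

# Assumed Apache "combined" format; the report does not state the real format.
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical keyword list; real robot detection uses far larger databases.
ROBOT_HINTS = ("bot", "spider", "crawl", "slurp")


def is_robot(user_agent: str) -> bool:
    """Guess whether a user-agent string belongs to a robot."""
    ua = user_agent.lower()
    return any(hint in ua for hint in ROBOT_HINTS)


def count_hits(lines):
    """Count robot, visitor and unparsed lines in an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            counts["unparsed"] += 1
        elif is_robot(match["agent"]):
            counts["robot"] += 1
        else:
            counts["visitor"] += 1
    return counts


# Made-up sample records using documentation-reserved IP addresses.
SAMPLE_LINES = [
    '192.0.2.10 - - [15/Oct/2013:10:12:01 +0000] "GET /robots.txt HTTP/1.1" '
    '200 120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [15/Oct/2013:10:12:05 +0000] "GET /index.html HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (Windows NT 6.1; rv:24.0) Gecko/20100101 Firefox/24.0"',
    "not a log line",
]

counts = count_hits(SAMPLE_LINES)
print(dict(counts))
```

A keyword test like this misses robots that disguise their user agent, so any count it produces is a lower bound on robot traffic.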
